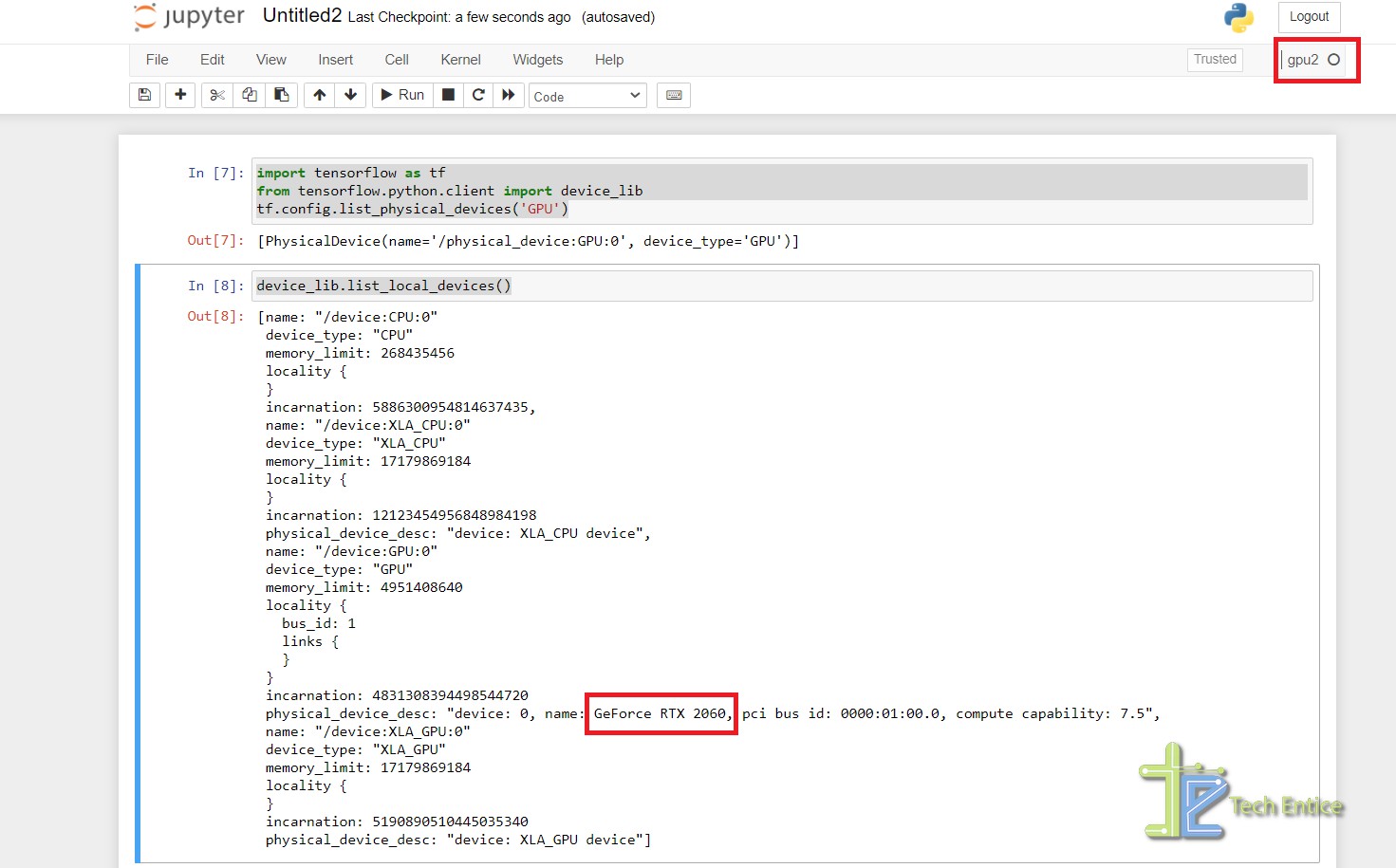

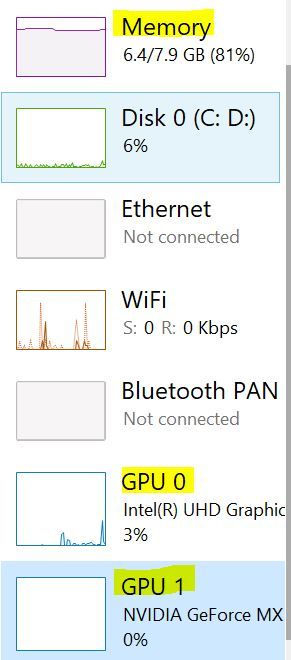

Is there any way to print out the gpu memory usage of a python program while it is running? - Stack Overflow

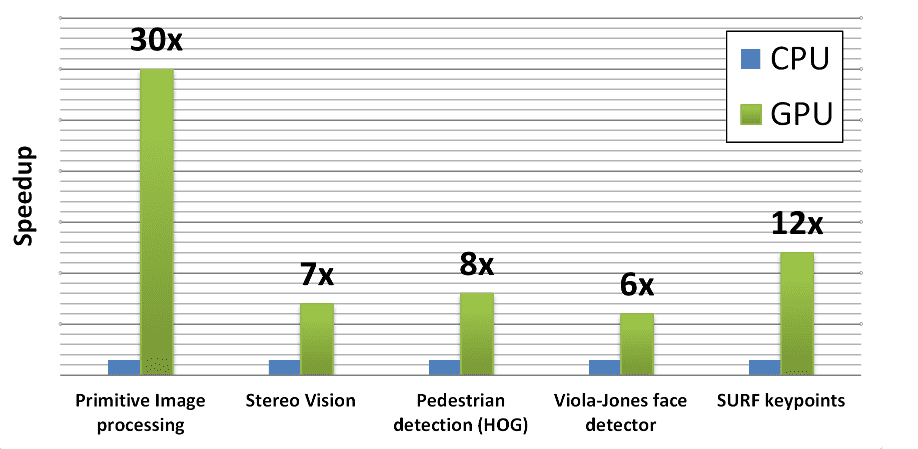

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

Beyond CUDA: GPU Accelerated Python on Cross-Vendor Graphics Cards with Vulkan Kompute - TIB AV-Portal

Beyond CUDA: GPU Accelerated Python on Cross-Vendor Graphics Cards with Kompute and the Vulkan SDK - YouTube

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

why use GPU instead of CPU for temperature compensation? · Issue #28 · pimoroni/enviroplus-python · GitHub